Scenario

You have a Windows Server 2003 machine (x86 or x64) running IIS 6.0 as your development server. You need to test several of your web sites over SSL, and all of them happen to share the same domain name, for example site1.mydomain.com, site2.mydomain.com, site3.mydomain.com, …

Disclaimer

I am not a security or certificate expert, but in this post I want to share the steps that have worked well for me when implementing SSL certificates with the SelfSSL tool for development purposes.

What can you do?

Instead of assigning an individual certificate to each site, you can implement a single wildcard certificate, for example one issued for *.mydomain.com.

You can create this certificate with the SelfSSL utility, which is part of the IIS 6.0 Resource Kit Tools.

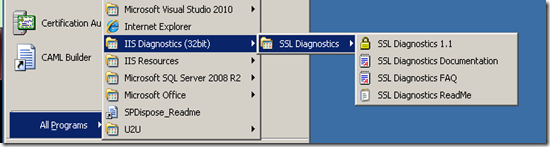

You can then use the SSL Diagnostics utility, also part of the IIS 6.0 Resource Kit, to validate the certificate assignments for your sites.
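A single wildcard certificate covers site1, site2 and site3 because of how clients match a wildcard name against a host name. The Python sketch below illustrates a simplified version of the rule most clients apply (see RFC 6125): the `*` stands for exactly one whole left-most label. `matches_wildcard` is a hypothetical helper written for illustration only; it is not part of any IIS tool, and real browsers apply additional checks.

```python
def matches_wildcard(cert_name: str, host: str) -> bool:
    """Return True if host is covered by cert_name (e.g. '*.mydomain.com').

    Simplified rule: '*' replaces exactly one whole left-most label,
    every other label must match exactly, and comparison ignores case.
    """
    cert_labels = cert_name.lower().rstrip(".").split(".")
    host_labels = host.lower().rstrip(".").split(".")
    if len(cert_labels) != len(host_labels):
        return False  # '*' never spans more or fewer than one label
    first_cert, first_host = cert_labels[0], host_labels[0]
    if first_cert == "*":
        if not first_host:
            return False  # an empty label is not a valid host name
    elif first_cert != first_host:
        return False
    return cert_labels[1:] == host_labels[1:]


for name in ["site1.mydomain.com", "site2.mydomain.com",
             "mydomain.com", "a.site1.mydomain.com"]:
    print(name, matches_wildcard("*.mydomain.com", name))
```

With *.mydomain.com, site1.mydomain.com and site2.mydomain.com match, while mydomain.com itself and a deeper name such as a.site1.mydomain.com do not, so keep all test sites exactly one level below the domain.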

How to implement a wildcard self-signed certificate on Windows Server 2003 with IIS 6.0?

High level steps:

- In IIS, create all your web sites (Site1, Site2, Site3, …).

- Use SelfSSL to create a wildcard certificate and assign it to one existing web site in IIS.

- Export the certificate, along with its private key, to a .pfx file.

- In the local computer certificate store, import the certificate into Trusted Root Certification Authorities.

- In IIS, assign the wildcard certificate to each of your sites.

- From the command prompt, set the SecureBindings for each site.

- In your client browser, add your wildcard domain name as a trusted site.

Step 1: Create IIS Sites

First, create your sites in IIS Manager. You don't have to assign host headers at this time; we will cover host headers later.

Step 2: Create Certificate

First, download and install the IIS 6.0 Resource Kit Tools. The utilities you select are installed under <SystemDrive>:\Program files[(x86)]\IIS Resources\

Next, create a wildcard certificate for your domain name, for example "*.mydomain.com".

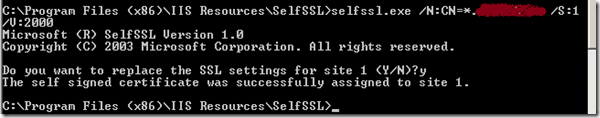

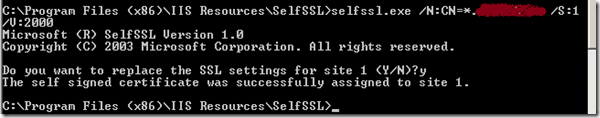

We will use the SelfSSL tool to create the wildcard certificate against any one of the IIS sites (we will apply the certificate to all sites later). Open a command prompt and navigate to the folder where the SelfSSL utility is installed. Run selfssl /? to see all available options. Use the following syntax:

<SystemDrive>:\Program files[(x86)]\IIS Resources\>selfssl.exe /N:CN=*.<mydomain.com> /S:<IISSiteID>

Substitute your common domain name for <mydomain.com>, and for <IISSiteID> substitute the site ID shown in the Identifier column of IIS Manager. (The Default Web Site is always 1; sites you create have much larger numbers.)
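If you prefer to script this step, the substitution is plain string assembly. The Python sketch below builds the same command line for a given domain and site ID. It uses only the /N and /S switches shown above, `selfssl_args` is a hypothetical helper, and it only prints the command, which you can then paste into the command prompt on the server.

```python
def selfssl_args(domain: str, site_id: int) -> list[str]:
    """Build the SelfSSL argument list for a wildcard certificate.

    Uses only the /N (common name) and /S (IIS site ID) switches from the
    syntax above; run 'selfssl /?' on the server to see the other options.
    """
    if site_id < 1:
        raise ValueError("IIS site identifiers start at 1")
    return ["selfssl.exe", f"/N:CN=*.{domain}", f"/S:{site_id}"]


# 1 is the Default Web Site; replace it with the Identifier of your own site.
print(" ".join(selfssl_args("mydomain.com", 1)))
```

For the example values this prints selfssl.exe /N:CN=*.mydomain.com /S:1, matching the syntax above.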

This step creates a wildcard certificate, assigns it to the given site, and stores it in the Local Computer > Personal certificate store.

Let's check this:

From the command prompt, run mmc. Add the Certificates snap-in and choose Computer account (Local computer).

Now navigate to Console Root > Certificates (Local Computer) > Personal > Certificates. Here you should find your newly created wildcard certificate, along with the machine certificate and any other personal certificates.

Step 3: Export the certificate

Now export this certificate along with its private key, so that in the next step we can import it into Trusted Root Certification Authorities.

You can export the certificate from two places: from the Certificates console we opened in the previous step, or from IIS Manager on the site against which we created the wildcard certificate.

From either place you can launch the Certificate Export Wizard.

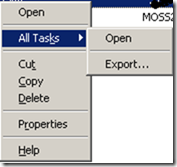

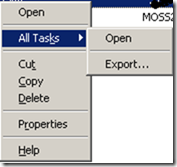

In the Certificates console, right-click your wildcard certificate and choose All Tasks > Export.

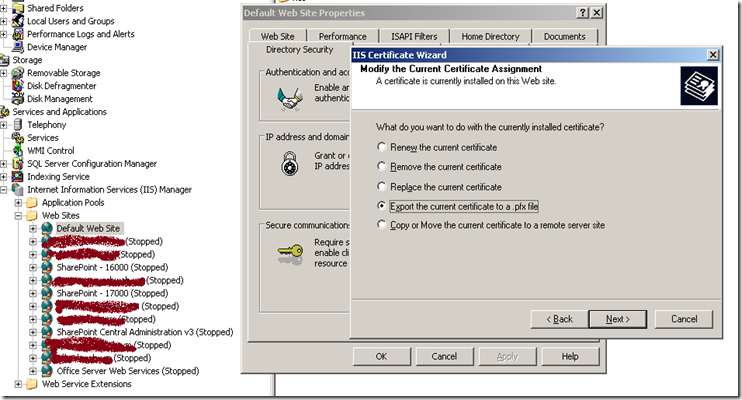

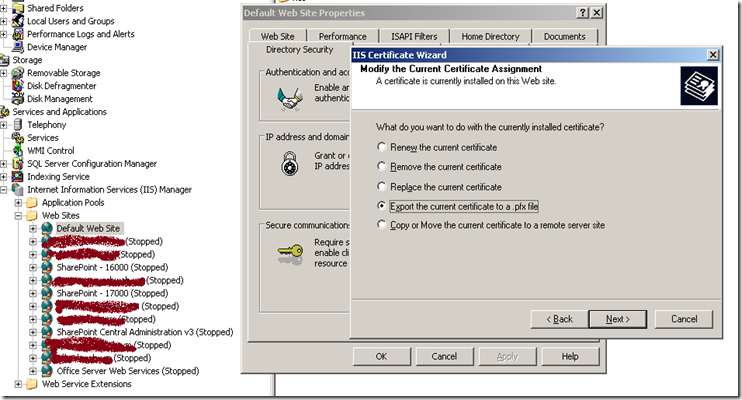

Alternatively, in IIS Manager, right-click the site you used with the SelfSSL command, choose Properties, open the Directory Security tab, click Server Certificate, and choose "Export the current certificate to a .pfx file".

Choose a file name and a local folder where the exported certificate should be saved. In the next step, provide a password to protect the file, because you are also exporting the private key.

Step 4: Import the certificate into Trusted Root Certification Authorities

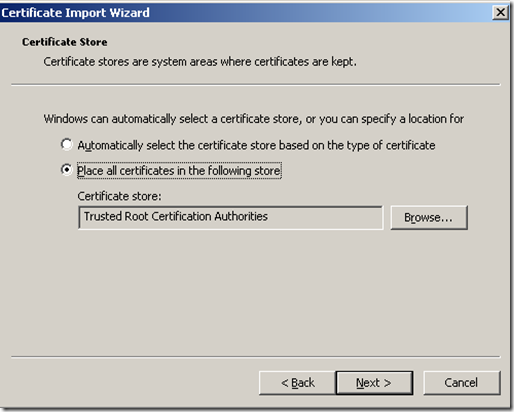

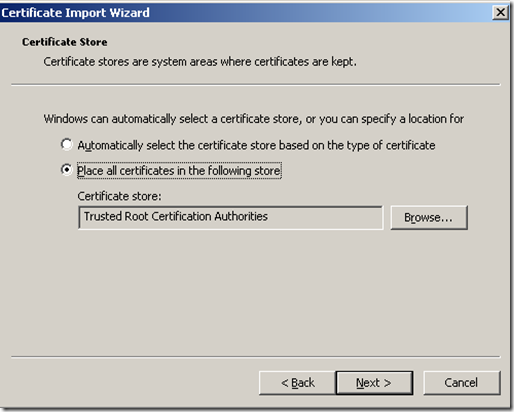

Now switch back to the Certificates console and navigate to Trusted Root Certification Authorities > Certificates.

Right-click the Certificates node and choose All Tasks > Import.

Choose the .pfx file you saved above and enter the password you used to protect it.

Next, choose the certificate store to import into (Trusted Root Certification Authorities).

Finally, confirm that the import was successful.

Step 5: Assign the wildcard certificate in IIS

Now switch to IIS Manager. For each of your IIS sites, assign the new SelfSSL-generated certificate (Properties > Directory Security > Server Certificate > Assign an existing certificate). Leave both the SSL port and the default TCP port unchanged. Some sites may be in a stopped state; that is fine at this stage, because all of the sites are using the same default port 80.

Step 6: Assign SecureBindings

The prerequisite for this step is that the IIS Administration Scripts (AdminScripts) are installed, by default in <SystemDrive>:\Inetpub\AdminScripts. If they are not, install them from Control Panel > Add or Remove Programs > Add/Remove Windows Components, choosing IIS and reinstalling the AdminScripts.

Now go to IIS Manager and note the Identifier of each site that needs the new certificate.

Open a command prompt and change directory to the AdminScripts folder.

Enter the following command for each IIS site to which you want the certificate assigned:

cscript.exe adsutil.vbs set /w3svc/<site identifier>/SecureBindings ":443:<host header>"

where <site identifier> is the Identifier you noted above and <host header> is the host header for the web site, for example site1.mydomain.com.
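With several sites, typing this command once per site is error-prone. The Python sketch below prints one SecureBindings command per site, in exactly the form shown above, so you can paste the output into the command prompt in the AdminScripts folder or save it as a .cmd file. The site identifiers and host headers in `SITES` are hypothetical placeholders; replace them with the values from the Identifier column in IIS Manager.

```python
# Hypothetical values: IIS site Identifier -> SSL host header.
SITES = {
    1: "site1.mydomain.com",
    1234567890: "site2.mydomain.com",
    987654321: "site3.mydomain.com",
}


def securebindings_command(site_id: int, host_header: str, port: int = 443) -> str:
    """Return the adsutil.vbs command that sets SecureBindings for one site."""
    return (
        f"cscript.exe adsutil.vbs set /w3svc/{site_id}/SecureBindings "
        f'":{port}:{host_header}"'
    )


for site_id, host_header in SITES.items():
    print(securebindings_command(site_id, host_header))
```

Every site keeps port 443. Because IIS selects the certificate for the IP address and port before it can read the host header, all sites on that port share one certificate, which is why the wildcard certificate is needed for this setup.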

After these steps are completed, go back to IIS Manager and start each of your sites.

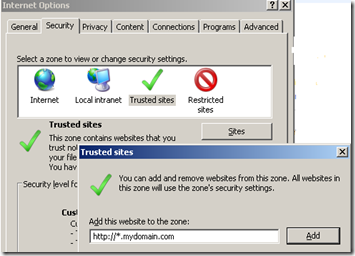

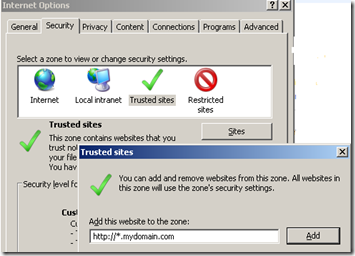

Step 7: Add to Trusted Sites

In the browser on your development server, add your wildcard domain name (*.mydomain.com) as a trusted site.

Validation

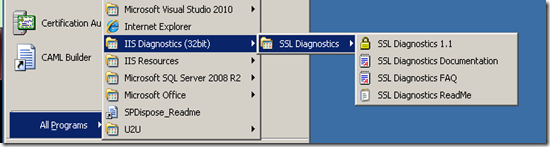

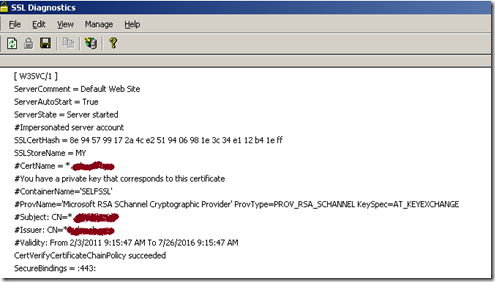

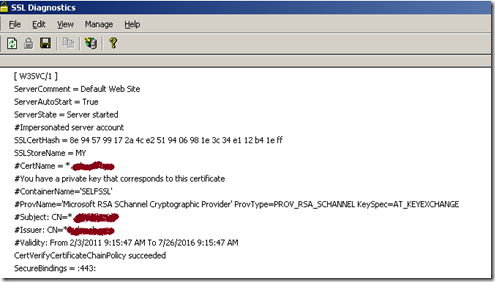

The IIS Resource Kit installation also provides the SSL Diagnostics tool.

Run SSL Diagnostics. It checks the SSL certificate for each IIS site and produces a report like the one in the screenshots.

In the screenshots, I have masked the domain names I used; you should see your own wildcard domain name.

Next, browse to each of your IIS sites in the browser; each site should load without any certificate warning.

I hope these steps simplify your SSL setup for development.

Things to consider beyond just SSL

- Simulate SSL per site in your development environment.

- Address any browser warnings about mixed content, if present.

- I will update this post if anything else comes to my attention.